Good afternoon, VMware virtualization enthusiasts, and Hyper-V users on whose behalf Microsoft has decided that you don’t need hot migration if you have an intern and $50,000 in cash.

Simon Long has shared with us this fantastic article he wrote about VMotion performance; more specifically, about fine-tuning the number of concurrent VMotions allowed by vCenter. This one is going into my document repository and tweaks ‘n’ tricks collection. Thank you, Simon, and everyone, please remember that virtualization is not best enjoyed in moderation!

Simon can be reached via email at contact (at) simonlong.co.uk as well as @SimonLong_ on Twitter.

I’ll set the scene a little….

I’m working late. I’ve just installed Update Manager and I’m going to run my first updates. As with any new system, I’m not completely confident, so I decided “out of hours” would be the best time to try.

I hit “Remediate” on my first Host, then sat back, cup of tea in hand, and watched to see what happened…. The Host’s VMs were slowly migrated off, 2 at a time, onto other Hosts.

“It’s gonna be a long night,” I thought to myself. So whilst I was going through my Hosts one at a time, I also fired up Google and tried to find out if there was any way I could speed up the VMotion process. There didn’t seem to be any articles or blog posts (that I could find) about improving VMotion performance, so I created a new Servicedesk job for myself to investigate this further.

Three months later, whilst at a product review at VMware UK, I was chatting to their Inside Systems Engineer, Chris Dye, and asked him if there was a way of increasing the number of simultaneous VMotions from 2 to something higher. He was unsure, so he did a little digging, found some information that might be helpful and sent it across for me to test.

After a few hours of basic testing over the quiet Christmas period, I was able to increase the number of simultaneous VMotions… Happy Days!!

But after some further testing, it seemed that the limit on simultaneous VMotions is actually applied per Host. This means that if I set my vCenter Server to allow 6 VMotions and then place 2 Hosts into maintenance mode at the same time, there could actually be 12 VMotions running simultaneously. This is certainly something to consider when deciding how many VMotions you want running at once.
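The per-Host behaviour above amounts to simple multiplication. A minimal sketch, assuming the limit applies to each Host being evacuated and that every such Host has enough VMs to use its full allowance (the function name is hypothetical):

```python
def total_concurrent_vmotions(per_host_limit: int, hosts_evacuating: int) -> int:
    """Upper bound on VMotions in flight when several Hosts are evacuated at once.

    Assumes the limit is applied per Host, as observed above, and that each
    evacuating Host has enough VMs to use its whole allowance.
    """
    if per_host_limit < 0 or hosts_evacuating < 0:
        raise ValueError("limits and host counts must be non-negative")
    return per_host_limit * hosts_evacuating


# 6 VMotions allowed per Host, 2 Hosts placed into maintenance mode together
print(total_concurrent_vmotions(6, 2))  # 12
```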

Here are the steps to increase the number of simultaneous VMotion migrations per Host.

1. RDP to your vCenter Server.

2. Locate vpxd.cfg (default location: “C:\Documents and Settings\All Users\Application Data\VMware\VMware VirtualCenter”).

3. Make a backup of vpxd.cfg before making any changes.

4. Edit the file using WordPad and insert the following lines between the <vpxd></vpxd> tags:

<ResourceManager>
  <maxCostPerHost>12</maxCostPerHost>
</ResourceManager>

5. Now you need to decide what value to give “maxCostPerHost”.

A cold migration has a cost of 1 and a hot migration (aka VMotion) has a cost of 4. I first set mine to 12 because I wanted to see whether it would then allow 3 VMotions at once (3×4 = 12). I now permanently have mine set to 24, which gives me 6 simultaneous VMotions per Host (6×4 = 24).

I’m not sure what the maximum value is; the largest I tested was 24.

6. Save your changes and exit WordPad.

7. Restart the “VMware VirtualCenter Server” service to apply the changes.
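Steps 3 to 5 can also be scripted. The Python sketch below is not part of the original procedure: it assumes vpxd.cfg is well-formed XML with a vpxd element directly under the root element, it uses the costs from step 5 (1 per cold migration, 4 per VMotion, as observed on this vCenter version), and the function names are hypothetical. ElementTree may reformat the file when writing it back, which is one more reason to keep the backup from step 3. The service restart in step 7 is still needed afterwards.

```python
import shutil
import xml.etree.ElementTree as ET
from pathlib import Path

# Migration costs as described in step 5 (other vCenter versions may differ).
MIGRATION_COST = {"cold": 1, "vmotion": 4}


def max_cost_for(count: int, kind: str = "vmotion") -> int:
    """maxCostPerHost value that allows `count` concurrent migrations of one kind."""
    return count * MIGRATION_COST[kind]


def concurrent_allowed(max_cost: int, kind: str = "vmotion") -> int:
    """How many migrations of one kind fit within a given maxCostPerHost."""
    return max_cost // MIGRATION_COST[kind]


def set_max_cost_per_host(cfg_path: Path, max_cost: int) -> Path:
    """Back up vpxd.cfg, then set vpxd/ResourceManager/maxCostPerHost.

    Assumes <vpxd> is a direct child of the root element.
    Returns the path of the backup copy.
    """
    backup = cfg_path.with_name(cfg_path.name + ".bak")
    shutil.copy2(cfg_path, backup)  # step 3: back up before changing anything

    # Keep comments inside the root element (Python 3.8+); layout may still change.
    parser = ET.XMLParser(target=ET.TreeBuilder(insert_comments=True))
    tree = ET.parse(cfg_path, parser=parser)
    vpxd = tree.getroot().find("vpxd")
    if vpxd is None:
        raise ValueError("no <vpxd> element under the root element")

    # Step 4: reuse existing elements if present, otherwise create them.
    manager = vpxd.find("ResourceManager")
    if manager is None:
        manager = ET.SubElement(vpxd, "ResourceManager")
    cost = manager.find("maxCostPerHost")
    if cost is None:
        cost = ET.SubElement(manager, "maxCostPerHost")
    cost.text = str(max_cost)

    tree.write(cfg_path, encoding="utf-8")
    return backup
```

For example, max_cost_for(6) gives 24, the value used in step 5, and concurrent_allowed(12) gives 3.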

Now that I know how to change the number of simultaneous VMotions per Host, I decided to run some tests to see whether it actually made any difference to overall VMotion performance.

I had 2 Hosts with 16 almost identical VMs. I created a job to migrate the 16 VMs from Host 1 to Host 2.

On both Hosts, the VMotion vmnic was a single 1Gbit NIC connected to a Cisco switch that also carries other network traffic.

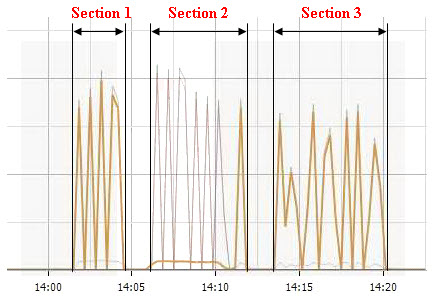

The Network Performance graph above was recorded during my testing and displays the “Network Data Transmit” measurement on the VMotion vmnic. The 3 highlighted sections represent the following:

Section 1 – 16 VMs VMotioned from Host 1 to Host 2 using a maximum of 6 simultaneous VMotions.

Time taken = 3:30 (min:s)

Section 2 – This was not a test; I was simply migrating the VMs back onto the Host for the 2nd test (Section 3).

Section 3 – 16 VMs VMotioned from Host 1 to Host 2 using a maximum of 2 simultaneous VMotions.

Time taken = 6:36

Time difference = 3:06

3 Mins!! I wasn’t expecting it to be that much. Imagine if you had a 50 Host cluster…how much time would it save you?

I ran the same test again, but migrating only 6 VMs instead of 16.

Migrating off 6 VMs with only 2 simultaneous VMotions allowed:

Time taken = 2:24

Migrating off 6 VMs with 6 simultaneous VMotions allowed:

Time taken = 1:54

Time difference = 30 s

It’s still an improvement, albeit not as big.
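The differences above are easier to compare in seconds. A small sketch, reading each time as minutes and seconds (so 3.30 means 3 min 30 s; this is the only reading under which 2.24 and 1.54 differ by 30 s). The helper names are hypothetical.

```python
def to_seconds(mss: str) -> int:
    """Convert "3.30" or "3:30" (minutes and seconds) to a number of seconds."""
    minutes, seconds = mss.replace(":", ".").split(".")
    if not 0 <= int(seconds) < 60:
        raise ValueError(f"seconds out of range in {mss!r}")
    return int(minutes) * 60 + int(seconds)


def compare(two_at_a_time: str, six_at_a_time: str) -> tuple[int, float]:
    """Return (seconds saved, speed-up factor) going from 2 to 6 concurrent VMotions."""
    slow, fast = to_seconds(two_at_a_time), to_seconds(six_at_a_time)
    return slow - fast, slow / fast


for label, slow, fast in [("16 VMs", "6.36", "3.30"), ("6 VMs", "2.24", "1.54")]:
    saved, speedup = compare(slow, fast)
    print(f"{label}: saved {saved} s, {speedup:.2f}x faster")
# 16 VMs: saved 186 s, 1.89x faster
# 6 VMs: saved 30 s, 1.26x faster
```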

Now don’t get me wrong, these tests are hardly scientific and would never be deemed a completely fair test, but I think you get the general idea of what I was trying to show.

I’m hoping to explore VMotion performance further, perhaps by using multiple physical NICs for VMotion and teaming them with EtherChannel, or maybe even by using 10Gbit Ethernet. Right now I don’t have the spare hardware to do that, but this is definitely something I will try when the opportunity arises.

Update 4/5/11: Limit Concurrent vMotions in vSphere 4.1 by Elias Khnaser.

Update 10/3/12: Changes to vMotion in vSphere 4.1 per VMware KB 1022851:

- Migration with vMotion and DRS for virtual machines configured with USB device passthrough from an ESX/ESXi host is supported

- Fault Tolerant (FT) protected virtual machines can now vMotion via DRS. However, Storage vMotion is unsupported at this time.

Note: Ensure that the ESX hosts are at the same version and build.

- 4 concurrent vMotion operations per host on a 1Gb/s network

- 8 concurrent vMotion operations per host on a 10Gb/s network

- 128 concurrent vMotion operations per VMFS datastore

Note: Concurrent vMotion operations are currently supported only when the source and destination hosts are in the same cluster. For further information, see the Configuration Maximums for VMware vSphere 4.1 document.

The vSphere 4.1 configuration maximums above remain true for vSphere 5.x. Enhanced vMotion operations, introduced in vSphere 5.1, count against both the vMotion maximums above and the Storage vMotion configuration maximums (8 concurrent Storage vMotions per datastore, 2 concurrent Storage vMotions per host, and 8 concurrent non-vMotion provisioning operations per host). Eric Sloof does a good job of explaining that here.
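These maximums can be expressed as a simple per-host and per-datastore check. This is a simplified sketch: the names are hypothetical, it covers only the figures quoted above, and it ignores both the same-cluster requirement and the limit on non-vMotion provisioning operations.

```python
# Maximums quoted above (vSphere 4.1, stated to still apply to vSphere 5.x).
VMOTION_PER_HOST = {1: 4, 10: 8}   # link speed in Gbit/s -> concurrent vMotions
VMOTION_PER_DATASTORE = 128
SVMOTION_PER_HOST = 2
SVMOTION_PER_DATASTORE = 8


def host_ok(link_gbps: int, vmotions: int, svmotions: int = 0, enhanced: int = 0) -> bool:
    """Check one host's concurrent operations against the per-host maximums.

    Enhanced vMotions (5.1+) count against BOTH the vMotion and Storage vMotion
    limits. Raises KeyError for link speeds not listed above.
    """
    return (vmotions + enhanced <= VMOTION_PER_HOST[link_gbps]
            and svmotions + enhanced <= SVMOTION_PER_HOST)


def datastore_ok(vmotions: int, svmotions: int = 0, enhanced: int = 0) -> bool:
    """Check one datastore's concurrent operations against the per-datastore maximums."""
    return (vmotions + enhanced <= VMOTION_PER_DATASTORE
            and svmotions + enhanced <= SVMOTION_PER_DATASTORE)


print(host_ok(1, 4), host_ok(1, 5), host_ok(10, 6, enhanced=2))  # True False True
```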

Nice write-up and good find, Jason!! It will be interesting to see if the community runs with this and comes up with a better number to set.

Steve

If you have only two hosts, load balancing on IP hash (EtherChannel) will not do much, I guess. Source and destination will always be the same, so the same route is chosen every single time.
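A simplified model of IP-hash teaming illustrates the point. It assumes the uplink is chosen as (source IP XOR destination IP) modulo the number of uplinks; VMware's exact hash may differ, and the addresses are hypothetical. Because a pair of VMkernel ports always has the same two addresses, every VMotion between them lands on the same NIC.

```python
import ipaddress


def ip_hash_uplink(src: str, dst: str, uplinks: int) -> int:
    """Simplified IP-hash uplink choice: (src XOR dst) mod number of uplinks."""
    s = int(ipaddress.IPv4Address(src))
    d = int(ipaddress.IPv4Address(dst))
    return (s ^ d) % uplinks


# Six concurrent VMotions between the same two hosts, 2 NICs in the team:
pairs = [("10.0.0.11", "10.0.0.12")] * 6
print({ip_hash_uplink(s, d, 2) for s, d in pairs})  # {1}: every VMotion uses one NIC
# A VMotion to a third host can land on the other NIC:
print(ip_hash_uplink("10.0.0.11", "10.0.0.13", 2))  # 0
```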

10Gb Ethernet might be worth checking out and should definitely shorten the time. Maybe when you have the time, also look into enabling jumbo frames and see if that makes any difference?

Good article,

Duncan

Yellow-Bricks.com

NICE!

Great work. I’ll delete mine from the drafts folder… as this… this is impressive and a half.

If you want to use two NICs in an IP EtherChannel, the VMkernel will only use one at a time. You can change this by setting maxActive = "2" for the VMkernel port group in the esx.conf file:

/net/vswitch/child[000x]/portgroup/child[000x]/teamPolicy/maxActive = "2"

Note: replace each x with the right numbers to match the VMkernel port group.

This works great for the iSCSI VMkernel and should also work for the VMotion VMkernel, but I have not tested it with VMotion.

Thanks Harry, I’m hopefully going to give EtherChannel and Jumbo Frames a go soon, so I’ll make sure I write up my findings.

Simon

Hi, nice work !

BTW, does anyone know the “cost” of a Storage VMotion?

Good question, I will look into it. I will hopefully soon have a small test environment that I can use to test Jumbo Frames, EtherChannel, and now Storage VMotion.

Simon

By default, it seems to be limited to 2 simultaneous SVMotions.

I’ve changed my configuration to allow 6 simultaneous VMotions; if I get time later, I’ll test this for you.

Simon

Hi Nitro, I’ve just tested and was able to run 4 simultaneous Storage VMotions, so I’m guessing that the setting I changed has the same effect on Storage VMotion 🙂

Simon

You’re right, I’ve tested it too 🙂

Thanks again !

I’d be interested to know if this affects VM downtime. I guess a good test is ping. Typically, with a standard Windows ping, at least 1 packet is lost while the VM switches hosts, and this varies depending on how quickly the memory delta is changing. I wonder if adjusting this value could affect that negatively?

From what I understand, this is how a VMotion occurs:

1) Pre-migration: set up target host, etc…

2) Copy memory, keep track of changes

3) Pause the VM

4) Copy the memory delta

5) Resume the VM on the target host
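The steps above describe iterative pre-copy. The toy model below is a sketch under stated assumptions (a constant page-dirtying rate, a fixed bandwidth share per VMotion, a hypothetical 64 MB stop threshold, hypothetical VM and link figures); it is not VMware's actual algorithm, only an illustration of how a smaller bandwidth share per VM can lengthen the pause in step 4.

```python
def precopy(memory_mb: float, dirty_mb_s: float, bandwidth_mb_s: float,
            stop_threshold_mb: float = 64.0, max_rounds: int = 30) -> tuple[float, float]:
    """Toy model of iterative pre-copy (steps 2-5 above).

    Returns (total migration seconds, seconds the VM is paused).
    Assumes a constant dirtying rate and a fixed bandwidth share for this VM.
    """
    if bandwidth_mb_s <= 0:
        raise ValueError("bandwidth must be positive")
    to_send = memory_mb                  # step 2: first pass copies all memory
    elapsed = 0.0
    for _ in range(max_rounds):
        seconds = to_send / bandwidth_mb_s
        elapsed += seconds
        # Pages dirtied while this pass ran must be sent again (never more than all memory).
        to_send = min(memory_mb, dirty_mb_s * seconds)
        if to_send <= stop_threshold_mb:
            break                        # delta is small enough to stop and copy
    # If max_rounds runs out (dirtying outpaces the link), the remaining delta is copied anyway.
    pause = to_send / bandwidth_mb_s     # steps 3-4: VM paused while the delta is copied
    return elapsed + pause, pause


# A 4096 MB VM dirtying 10 MB/s on a link giving roughly 110 MB/s of usable
# throughput (hypothetical figures), shared by 2 or by 6 concurrent VMotions:
for concurrent in (2, 6):
    total, pause = precopy(4096, 10, 110 / concurrent)
    print(f"{concurrent} at once: {total:.0f} s per VM, paused {pause:.2f} s")
```

With these made-up figures, the VM that shares the link six ways pauses noticeably longer than one sharing it two ways; only real ping or log measurements can show whether that matters in practice.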

Obviously you want step 4 to happen as quickly as possible for minimal downtime. I agree that it takes less time to migrate 6 VMs at once than 2 at a time, but the migration time per-VM increases.

With your example above:

6 VMs at once = 1:54 migration time for each VM

2 VMs at once = 0:48 migration time for each VM (2:24 / 3 batches = 144 s / 3)

So if we assume that step 4 above is typically a percentage of the total migration time, it’s possible you’ve doubled the downtime of the VM during the migration.

Maybe there are syslog messages generated during a VMotion that would be more accurate than a ping for seeing the exact time a VM was stopped and started.

Just a thought…

PS – This is a good PDF for a technical explanation of VMotion: http://www.vmware.com/pdf/usenix_vmotion.pdf

Justin, I guess you’ll find what you need in the vmware.log of the VM migrated with VMotion (it’s divided into 14 steps, if I remember correctly).

Hi Justin, it’s a really good question and, personally, I haven’t run a ping on the servers whilst VMotioning them.

But having said that, I have been using 6 simultaneous VMotions on a new 2-Host cluster in which I have regularly put either host into maintenance mode, thus VMotioning 20+ VMs. Whilst this process was running, we experienced no downtime on any of the systems running in the cluster.

In step 4 of the VMotion process, when the memory delta is copied between Hosts, I would agree that ideally this needs to be as quick as possible, and if there is less bandwidth available this could cause downtime.

But surely running a memory-hungry app, e.g. Exchange, is just as much of an issue? When a VMotion is performed on a VM using a massive amount of memory, the memory delta is going to be a LOT bigger than for a VM that is just idling, so in theory it should take a lot longer to transfer the delta.

For example:

Slow VMotion due to 6 simultaneous VMotions limiting available bandwidth:

Delta = 100MB transferred at 100Mbit/s = 8.39s

Slow VMotion due to a large delta from a memory-hungry app, but with normal available bandwidth:

Delta = 1GB transferred at 1Gbit/s = 8.59s
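The two figures above can be reproduced as follows. This assumes MB and GB are binary units (MiB, GiB) while the link speeds are decimal (Mbit/s, Gbit/s), an assumption inferred from the numbers themselves; protocol overhead is ignored and the function name is hypothetical.

```python
MiB, GiB = 2**20, 2**30


def transfer_seconds(size_bytes: int, link_bits_per_s: float) -> float:
    """Time to push size_bytes over a link at a given bit rate, ignoring overhead."""
    return size_bytes * 8 / link_bits_per_s


print(f"{transfer_seconds(100 * MiB, 100e6):.2f}")  # 8.39
print(f"{transfer_seconds(1 * GiB, 1e9):.2f}")      # 8.59
```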

As far as I know (correct me if I’m wrong), running a memory-hungry application hasn’t yet caused downtime on a VM guest OS during a VMotion.

If I get a spare moment, I might run a memory stress-test app whilst running multiple VMotions and see if the ping drops out. If anyone else has a bit of spare time on their hands… feel free to test it for me 🙂

With regard to your point about per-VM VMotion times, you are correct: each individual VM does seem to take less time when fewer VMotions run at once. But I think you need to ask whether you’d prefer a faster per-VM time or a faster overall process.

Simon

This is interesting but somewhat counter-intuitive to me.

I’ve often had discussions with folks on the optimal concurrent VMotion setting, and had always worked on the basis that “less is more”. By that, I mean that if you have a set amount of bandwidth on your VMotion network, having multiple VMs migrating at once will cause each one to run slower, as they’re competing for bandwidth to transfer memory state. In turn, the longer a VM takes to transfer, the more time it leaves for memory pages to go stale and have to be retransmitted. Thus, the longer a VMotion takes, the more data has to be transmitted over the VMotion network.

This always led me to believe that having VMotions occur in series rather than in parallel would yield the best results.

I guess there are other factors at play here, and I need to go back and re-assess (and test!).

Hi Rob, I agree in theory with what you are saying; I would be really interested to hear any test results you come back with.

I know that my tests were not as controlled as they should be.

Regards

Simon (www.simonlong.co.uk)

The plot of network performance does not show values on the vertical axis. I’m curious how much of the vMotion network bandwidth was utilized when performing 6 concurrent vMotions. I’m presuming (based on the plot) you were utilizing 60-80% of a 1Gb pipe.

We just tried this procedure on a vSphere (ESX4/vCenter4) system and could not get it to work. I assume that vpdx should be vpxd. We changed maxCostPerHost to 16, then tried to VMotion 4 VMs. They VMotioned 1 at a time. Is there some other method for enabling this in vSphere?

I wish to modify my previous post. When we tried to VMotion manually, only 1 VM VMotioned at a time. However, when we created a DRS cluster and retried the experiment, it worked as advertised, both manually and automatically.

Sorry for the confusion and thanks for the initial posting!

I followed the instructions:

Edited “C:\Documents and Settings\All Users\Application Data\VMware\VMware VirtualCenter\vpdx.cfg” with values 24, 32 and 48, and restarted the “VMware VirtualCenter Server” service.

Then, from the vClient (where I set 8 VMs as one group at the DRS cluster level), I tried to migrate 8 (and also 5) VMs simultaneously, and saw them migrating up to 2 at a time.

My setup includes 2 esx4.1 servers connected back to back via 10GE, and I’m mostly interested in VM migration (less with storage migration).

Additional server runs the vCenter (win2008server-64).

I wonder if someone can send me detailed instructions (or, even better, a script) for migrating several VMs concurrently?

Ofer

Hello,

We followed your instructions but did not get the expected result. We have a two-node cluster with vSphere 4.10, 320092.

After changing the config, the maximum number of simultaneously transferred VMs stayed at 4. Is there anything else we have to change? The dedicated vMotion Ethernet connection is 1Gbit.

Many greetings

Heiko

@Heiko I did some testing a few weeks ago myself and came to the conclusion that this setting in the vpxd.cfg file has been deprecated in vSphere 4.1. Furthermore, simultaneous vMotions are capped at 4 between 2 hosts. I’m going to assume that the cap actually applies at each host endpoint rather than to a pair of hosts, i.e. all of the below could be occurring simultaneously (again, this should be tested/proven before being considered gospel):

1Gbhost A -> 1Gbhost B = 4 concurrent vMotions

1Gbhost C -> 1Gbhost D = 2 concurrent vMotions

1Gbhost C -> 1Gbhost E = 2 concurrent vMotions

1Gbhost D -> 1Gbhost E = 2 concurrent vMotions

Similarly, the cap at 10GbE I’m assuming is going to be 8 concurrent vMotions as this is the supported limit VMware has published for 10GbE in vSphere 4.1.

i.e. all of the below could be occurring simultaneously (again, this should be tested/proven before being considered gospel):

10Gbhost A -> 10Gbhost B = 8 concurrent vMotions

10Gbhost C -> 10Gbhost D = 4 concurrent vMotions

10Gbhost C -> 10Gbhost E = 4 concurrent vMotions

10Gbhost D -> 10Gbhost E = 4 concurrent vMotions
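The endpoint hypothesis above can be checked mechanically by adding up the concurrent vMotions that touch each host, as source or destination. The sketch encodes the commenter's untested assumption (a cap of 4 per host on 1GbE, 8 on 10GbE), not confirmed VMware behaviour; the function names are hypothetical and the host names follow the examples.

```python
from collections import Counter


def endpoint_load(migrations: list[tuple[str, str, int]]) -> Counter:
    """Sum the concurrent vMotions touching each host, as source or destination."""
    load: Counter = Counter()
    for src, dst, count in migrations:
        load[src] += count
        load[dst] += count
    return load


def respects_cap(migrations: list[tuple[str, str, int]], cap: int) -> bool:
    """True if no host takes part in more than `cap` concurrent vMotions."""
    return all(n <= cap for n in endpoint_load(migrations).values())


one_gb = [("A", "B", 4), ("C", "D", 2), ("C", "E", 2), ("D", "E", 2)]
ten_gb = [("A", "B", 8), ("C", "D", 4), ("C", "E", 4), ("D", "E", 4)]
print(respects_cap(one_gb, 4), respects_cap(ten_gb, 8))  # True True
```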

With 10G, I have moved six large VMs (each with 8GB of highly active memory, 48GB in total) in less than 100 seconds.

You need Cisco UCS though; it has Ethernet QoS as part of its FCoE-based UCS architecture (blade servers).
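As a rough sanity check on that figure: moving 48GB in 100 seconds needs at least the average throughput below, assuming GB means GiB and each byte crosses the wire only once (pre-copy resends would push the real figure higher). The function name is hypothetical.

```python
def min_throughput_gbit_s(total_gib: float, seconds: float) -> float:
    """Lower bound on average throughput if every byte is sent exactly once."""
    return total_gib * 2**30 * 8 / seconds / 1e9


print(f"{min_throughput_gbit_s(6 * 8, 100):.2f} Gbit/s")  # 4.12 Gbit/s
```

That is well beyond what a single 1GbE link can carry.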

Thanks for sharing.

Very nice article thanks a lot